NodeJS Internals: Event Loop

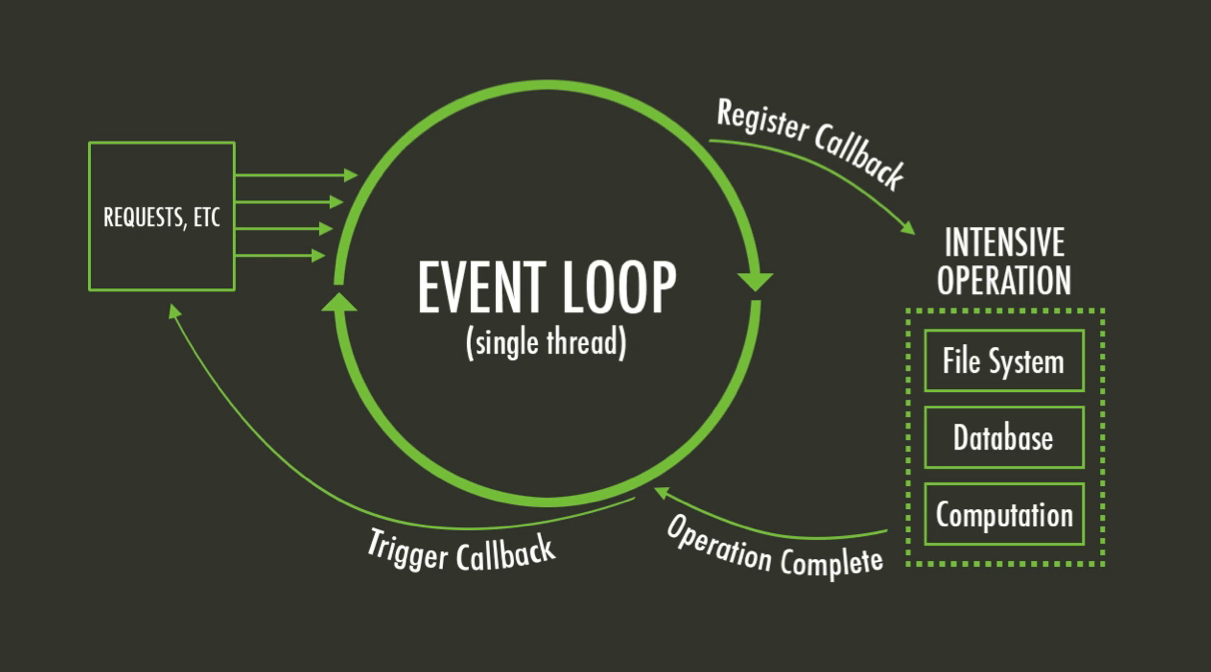

When getting into NodeJS, the first thing that might surprise you is its single-threaded model. Nowadays most operating systems support multi-core CPUs and threading, which lets developers perform multiple operations at the same time. So does NodeJS take advantage of multi-threading, or is it really single-threaded? And how does it handle I/O operations that depend on hardware, or other heavy work such as CPU-bound computations and DNS lookups? I will try to answer these questions throughout this article.

The Nature of I/O and CPU-Bound Operations

Peripheral devices need a way to communicate with the CPU to coordinate operations such as data transfers. The most common modes of communication are programmed I/O, interrupt-initiated I/O and direct memory access (DMA). With programmed I/O the CPU itself repeatedly polls the device status; with the other two modes the device (or the DMA controller) raises an interrupt to tell the CPU it can continue. Either way, the program has to wait for the data to be ready and process it chunk by chunk. Can we do that waiting on the main thread? No: with only one thread, every wait stalls everything else, which severely hurts performance and therefore scalability. Imagine the application is serving 1000 clients and one of them calls an API that has to read a file. The time needed to read that file is added to the response time of every connected client, and the situation quickly escalates if several clients need to perform I/O at the same time.
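To make this concrete, here is a minimal sketch. A 10 ms heartbeat interval plays the role of the other connected clients, and a hypothetical `blockFor()` helper busy-waits to stand in for a blocking file read (real read times depend on the disk, so a fixed 200 ms is assumed). While the single thread is stuck, no heartbeat can fire, so the largest gap between heartbeats is at least as long as the block.

```javascript
// Sketch: a 10 ms heartbeat stands in for the other clients being served,
// and blockFor() (hypothetical) stands in for a blocking file read.
const beats = [];
let maxGap = 0;

function blockFor(ms) {
  const start = Date.now();
  while (Date.now() - start < ms) {
    // Busy-wait: the single JS thread can do nothing else meanwhile.
  }
}

const heartbeat = setInterval(() => beats.push(Date.now()), 10);

// After ~50 ms, one "client" triggers a 200 ms blocking operation.
setTimeout(() => blockFor(200), 50);

// After ~400 ms, stop and report the largest gap between two heartbeats.
setTimeout(() => {
  clearInterval(heartbeat);
  for (let i = 1; i < beats.length; i++) {
    maxGap = Math.max(maxGap, beats[i] - beats[i - 1]);
  }
  console.log(`largest gap between heartbeats: ${maxGap} ms`);
}, 400);
```

Every other "client" (here, the heartbeat) pays the full 200 ms, which is exactly why NodeJS cannot afford to wait on the main thread.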

CPU-bound operations, on the other hand, demand a lot of processing and may take considerable time to complete. During that time the thread is fully busy and cannot process anything else. Typical examples are cryptographic algorithms such as PBKDF2. DNS lookups through getaddrinfo are not CPU-heavy, but they are blocking system calls, so they tie up a thread in the same way.
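Node's `crypto` module makes the difference between running a CPU-bound task on the main thread and offloading it easy to observe: it offers both `crypto.pbkdf2Sync()` and the callback-based `crypto.pbkdf2()`, which runs on libuv's thread pool. The password, salt, iteration count and key length below are arbitrary values chosen for illustration.

```javascript
const crypto = require('crypto');

const order = [];
const ITERATIONS = 100000; // arbitrary: large enough to take noticeable time

// Synchronous version: the main thread is blocked until the key is derived.
const t0 = Date.now();
crypto.pbkdf2Sync('secret', 'salt', ITERATIONS, 64, 'sha512');
console.log(`pbkdf2Sync blocked the main thread for ${Date.now() - t0} ms`);

// Asynchronous version: the work is handed to a thread-pool thread, so the
// main thread stays free and the setImmediate callback gets to run first.
crypto.pbkdf2('secret', 'salt', ITERATIONS, 64, 'sha512', (err) => {
  if (err) throw err;
  order.push('pbkdf2 done');
});
setImmediate(() => order.push('immediate'));

process.on('exit', () => console.log(order));
```

While the asynchronous derivation is still running on a pool thread, the event loop keeps turning and serves the `setImmediate()` callback.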

To deliver the scalability it promises, NodeJS must offload these kinds of operations from the main thread. It does so with the help of libuv.

Libuv

At the core of NodeJS sits libuv, whose main job is to provide the event loop and an engine for offloading heavy operations from the main thread. Here is the description from the libuv repository:

> libuv is cross-platform support library which was originally written for Node.js. It’s designed around the event-driven asynchronous I/O model.
>
> The library provides much more than a simple abstraction over different I/O polling mechanisms: ‘handles’ and ‘streams’ provide a high level abstraction for sockets and other entities; cross-platform file I/O and threading functionality is also provided, amongst other things.

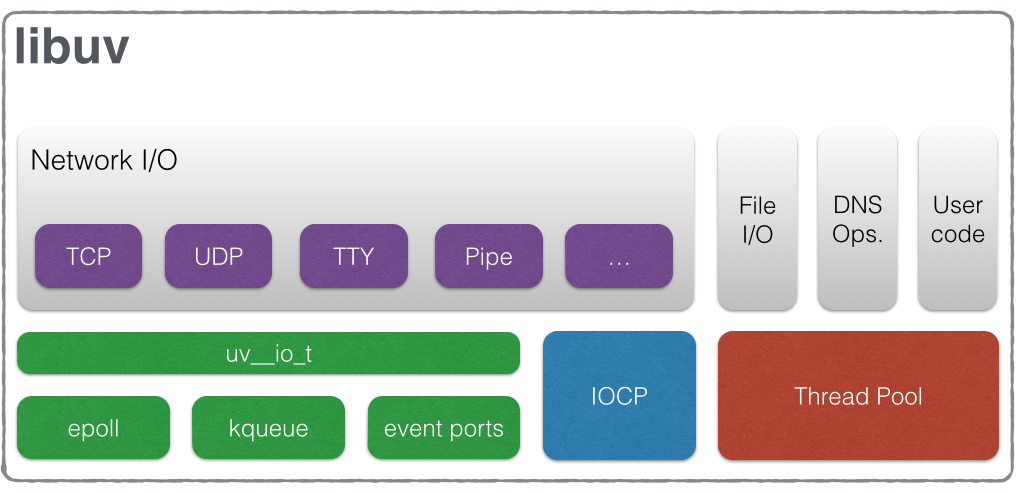

Libuv is written in C and implements the event loop with its queues, along with the platform-specific OS API calls. Let's take a closer look at libuv's internals.

Network I/O is performed on the main thread. Libuv uses non-blocking sockets, which it polls with the best mechanism the OS provides: epoll on Linux, kqueue on macOS (and other BSDs), event ports on SunOS and IOCP on Windows. During each loop iteration, the main thread blocks for a computed amount of time waiting for activity on the sockets registered with the poller, and the corresponding callbacks are fired to report socket conditions (open, closed, ready to read or write).
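A minimal sketch of network I/O handled entirely on the main thread: an echo server and a client living in the same process. This code creates no threads; libuv registers both sockets with the OS poller, and the callbacks run on the main thread when the sockets become readable or writable. Listening on port 0 asks the OS for any free port, and the `'ping'` payload is arbitrary.

```javascript
const net = require('net');

let received = '';

// Echo server: pipe each connection back into itself.
const server = net.createServer((socket) => socket.pipe(socket));

server.listen(0, '127.0.0.1', () => {
  const { port } = server.address();
  // Send 'ping' and half-close; the server echoes it and then closes too.
  const client = net.connect(port, '127.0.0.1', () => client.end('ping'));
  client.setEncoding('utf8');
  client.on('data', (data) => { received += data; });
  client.on('close', () => {
    console.log(`echoed back: ${received}`);
    server.close(); // no active handles left, so the loop can exit
  });
});
```

Both ends of the conversation are driven by the same event loop; the thread only waits inside the poll phase, never inside a socket call.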

In contrast, operations that are synchronous by nature, such as file I/O, run on a thread borrowed from a global thread pool. The same applies to the blocking DNS functions getaddrinfo and getnameinfo.
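A sketch showing that file I/O and `dns.lookup()` (which calls getaddrinfo) are handed off rather than run inline: the main script finishes first, and the callbacks arrive later on the main thread. The pool has 4 threads by default, and its size can be changed with the `UV_THREADPOOL_SIZE` environment variable. The temporary file name is an arbitrary choice, and the sketch assumes `'localhost'` can be resolved locally (a failed lookup is recorded rather than thrown).

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');
const dns = require('dns');

const order = [];
const file = path.join(os.tmpdir(), `threadpool-demo-${process.pid}.txt`);
fs.writeFileSync(file, 'hello from the thread pool');

// fs.readFile: the underlying open/read/close calls run on a pool thread.
fs.readFile(file, 'utf8', (err) => {
  if (err) throw err;
  order.push('readFile callback');
  fs.unlinkSync(file); // clean up the temporary file
});

// dns.lookup: getaddrinfo blocks, so it also runs on a pool thread.
dns.lookup('localhost', (err) => {
  order.push(err ? `lookup failed: ${err.code}` : 'lookup callback');
});

order.push('main script finished');
process.on('exit', () => console.log(order));
```

The relative order of the two callbacks depends on which pool thread finishes first; only the fact that both come after the main script is fixed.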

Event Loop

The event loop is an abstract concept used to model the event-driven architecture of NodeJS. It consists of six phases. Each phase has a dedicated FIFO queue of callbacks, which is drained when the loop enters that phase.

As illustrated in the following diagram, a loop tick starts with the Timers phase, proceeds clockwise through all phases and finishes with the Close Callbacks phase. At the end of the tick, the loop decides whether to run another tick or exit, depending on the loop's run mode (default, once, nowait) and on whether any active handles are left.
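The phase order can be observed directly. Inside an I/O callback, which runs in the Poll phase, a `setImmediate()` callback always runs before a 0 ms `setTimeout()`, because the loop reaches the Check phase before it wraps around to Timers. Outside an I/O callback (for example at the top level of the main module) the order of these two is not guaranteed, which is why the sketch starts from a directory read; the choice of directory is arbitrary.

```javascript
const fs = require('fs');
const os = require('os');

const order = [];

// Any I/O callback will do; reading a directory is just a convenient one.
fs.readdir(os.tmpdir(), () => {
  // We are now in the Poll phase.
  setTimeout(() => order.push('timeout'), 0); // Timers phase of the next tick
  setImmediate(() => order.push('immediate')); // Check phase of this tick
});

process.on('exit', () => console.log(order)); // [ 'immediate', 'timeout' ]
```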

Note: as the loop runs, more callbacks may be pushed into a queue while it is being drained. This can block the event loop forever if we keep adding to the microtask queue, for example by calling a Promise recursively, since that queue is drained until it is empty. This does not apply to timers: even with a 0 threshold, a timer that reschedules itself from its own callback runs again on the next loop tick at the earliest, so the other phases get to run in between.
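A bounded version of both scenarios (an unbounded promise chain would hang the process, which is exactly the point). A chain of 1,000 promise callbacks, each scheduling the next, is drained completely before a 0 ms timer gets a chance to run. By contrast, a timer that reschedules itself yields to the rest of the loop, so a `setImmediate()` callback gets in between. The chain length and the five timer rounds are arbitrary.

```javascript
const LIMIT = 1000; // arbitrary; remove the bound and the loop starves

// 1) Recursive microtasks: the queue is drained until empty.
let chainSteps = 0;
let stepsSeenByTimer = -1;
function step() {
  chainSteps++;
  if (chainSteps < LIMIT) Promise.resolve().then(step); // queue another microtask
}
setTimeout(() => { stepsSeenByTimer = chainSteps; }, 0);
Promise.resolve().then(step);

// 2) A self-rescheduling timer runs at most once per Timers phase.
let timerRounds = 0;
let roundsSeenByImmediate = -1;
function reschedule() {
  timerRounds++;
  if (timerRounds < 5) setTimeout(reschedule, 0);
}
setTimeout(reschedule, 0);
setImmediate(() => { roundsSeenByImmediate = timerRounds; });

process.on('exit', () => {
  console.log(`timer ran after ${stepsSeenByTimer} microtask steps`); // 1000
  console.log(`immediate ran after ${roundsSeenByImmediate} timer round(s)`); // 0 or 1
});
```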

Timers

A timer specifies a threshold, in milliseconds, after which its callback will be fired.

The loop starts with the Timers phase. A timer's callback is executed as soon as possible once its threshold has passed, so the threshold is a lower bound rather than an exact schedule. OS scheduling (process or thread priority) or long-running callbacks in other phases may delay it further. Technically, the time spent waiting in the Poll phase also adds to a timer's delay.
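A sketch of thresholds driving the Timers phase: two timers are scheduled in reverse order, and the one with the smaller threshold fires first. The printed delays are usually at or slightly above the thresholds, and they only grow if other callbacks keep the thread busy. The 10 ms and 30 ms values are arbitrary; note that Node.js treats any delay below 1 ms (including 0) as 1 ms.

```javascript
const start = Date.now();
const fired = [];

// Scheduled first, but with the larger threshold.
setTimeout(() => fired.push({ name: '30 ms timer', after: Date.now() - start }), 30);
// Scheduled second, with the smaller threshold: it still fires first.
setTimeout(() => fired.push({ name: '10 ms timer', after: Date.now() - start }), 10);

process.on('exit', () => {
  for (const { name, after } of fired) {
    console.log(`${name} fired after ${after} ms`);
  }
});
```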

Pending Callbacks

After the Timers phase, the loop enters the Pending Callbacks phase. This phase runs I/O callbacks that were deferred to the next loop iteration, i.e. work that could not be completed during the previous run of the loop. Take a TCP socket's ECONNREFUSED error as an example: some *nix systems wait before reporting such an error, so its callback may be queued to run in the Pending Callbacks phase.
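To see an ECONNREFUSED error surface as a callback, a sketch can connect to a port nothing is listening on. To find such a port, it briefly listens on port 0 and closes the server again (assuming no other process grabs the port in between). Whether the error is delivered from the Pending Callbacks phase or directly from the Poll phase depends on the OS, so this only shows the error path, not which phase ran it.

```javascript
const net = require('net');

let errorCode = null;

// Grab a free port, then release it so that nothing is listening there.
const probe = net.createServer();
probe.listen(0, '127.0.0.1', () => {
  const { port } = probe.address();
  probe.close(() => {
    const socket = net.connect(port, '127.0.0.1');
    socket.on('error', (err) => {
      errorCode = err.code; // expected: 'ECONNREFUSED'
      console.log(`connect failed with ${err.code}`);
    });
  });
});
```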

Idle, Prepare

This phase is used only internally by libuv. Idle handle callbacks run once per loop iteration, and prepare handle callbacks run right after them, just before the loop polls for I/O. This makes the phase a good place for work that must happen right before polling, for example post-initialization steps for handles such as TCP/UDP handles that need some time to become ready (like setting up a TCP connection).

Poll

This is one of the most important phases, as it takes care of processing I/O events. It has two main tasks:

- calculate the timeout for I/O polling (it must not block longer than the time until the nearest timer is due, otherwise timers and the rest of the loop would be held up)

- drain the poll event queue, which is populated during polling, by running the corresponding I/O callbacks

How is the polling timeout calculated? If we poll for too long, timers will fire late, which hurts their reliability. To understand this we need to dig into libuv's src/unix/core.c, which Node.js ships under deps/uv:

```c
int uv_run(uv_loop_t* loop, uv_run_mode mode) {
  /* ... */
  timeout = 0;
  if ((mode == UV_RUN_ONCE && !ran_pending) || mode == UV_RUN_DEFAULT)
    timeout = uv_backend_timeout(loop);

  uv__io_poll(loop, timeout);
  /* ... */
}

int uv_backend_timeout(const uv_loop_t* loop) {
  if (loop->stop_flag != 0)
    return 0;

  if (!uv__has_active_handles(loop) && !uv__has_active_reqs(loop))
    return 0;

  if (!QUEUE_EMPTY(&loop->idle_handles))
    return 0;

  if (!QUEUE_EMPTY(&loop->pending_queue))
    return 0;

  if (loop->closing_handles)
    return 0;

  return uv__next_timeout(loop);
}
```

In this snippet, uv_run is the main function, which executes all the phases. Before entering the Poll phase, it calculates a timeout by calling uv_backend_timeout. If there is more pressing work (the loop has been stopped, idle handles are active, callbacks are pending, or handles are waiting to be closed), or if nothing is keeping the loop alive anymore, the timeout is 0: the loop still enters the Poll phase, but it returns early without blocking. Otherwise the timeout comes from uv__next_timeout, which returns the time remaining until the nearest timer is due (0 if a timer is already overdue, -1 to block indefinitely if there are no timers). This ensures that timers won't be held up by polling for I/O.

When the watcher queue is empty, no I/O operation is registered, so there is nothing to wait for: the Poll phase returns immediately instead of waiting for the calculated timeout, and the loop moves on to the Check phase.
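The decision made by uv_backend_timeout and uv__next_timeout can be mirrored in a few lines of JavaScript. This is a simplified model, not libuv's code: the loop state is a plain object with hypothetical field names, queues are replaced by counts, and the -1 return value follows libuv's convention of "block until an event arrives".

```javascript
// A simplified model of libuv's uv_backend_timeout() and uv__next_timeout().
// The shape of `loop` (field names, counts instead of queues) is hypothetical.
const INT_MAX = 2 ** 31 - 1;

function backendTimeout(loop) {
  if (loop.stopFlag) return 0;                                 // uv_stop() was called
  if (!loop.hasActiveHandles && !loop.hasActiveReqs) return 0; // nothing keeps the loop alive
  if (loop.idleHandles > 0) return 0;                          // idle handles want to run
  if (loop.pendingCallbacks > 0) return 0;                     // deferred I/O callbacks
  if (loop.closingHandles > 0) return 0;                       // close callbacks to run
  return nextTimeout(loop);
}

function nextTimeout(loop) {
  if (loop.timerDueTimes.length === 0) return -1; // no timers: block until I/O arrives
  const nearest = Math.min(...loop.timerDueTimes);
  if (nearest <= loop.now) return 0;              // a timer is already due
  return Math.min(nearest - loop.now, INT_MAX);   // time left until the nearest timer
}

// Usage: a loop with one active handle, at loop time 1000 ms.
const base = {
  stopFlag: false, hasActiveHandles: true, hasActiveReqs: false,
  idleHandles: 0, pendingCallbacks: 0, closingHandles: 0,
  now: 1000, timerDueTimes: [],
};
console.log(backendTimeout({ ...base, timerDueTimes: [1250, 1040] }));           // 40
console.log(backendTimeout(base));                                               // -1
console.log(backendTimeout({ ...base, idleHandles: 1, timerDueTimes: [1040] })); // 0
```

The model makes the priority explicit: any work that must run soon forces a non-blocking poll, and only when nothing is pending does the nearest timer decide how long the loop may sleep.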

Check Phase

This phase processes the setImmediate callback queue. Based on my analysis of the source code, once the first immediate callback has run, the nextTick and microtask queues are drained before each subsequent immediate callback by calling runNextTicks, as can be seen in the following code snippet:

```javascript
function processImmediate() {
  const queue = outstandingQueue.head !== null ?
    outstandingQueue : immediateQueue;
  let immediate = queue.head;

  // Clear the linked list early in case new `setImmediate()`
  // calls occur while immediate callbacks are executed
  if (queue !== outstandingQueue) {
    queue.head = queue.tail = null;
    immediateInfo[kHasOutstanding] = 1;
  }

  let prevImmediate;
  let ranAtLeastOneImmediate = false;
  while (immediate !== null) {
    if (ranAtLeastOneImmediate)
      runNextTicks();
    else
      ranAtLeastOneImmediate = true;

    // ...
  }
}
```

Here is the definition of runNextTicks, where runMicrotasks is invoked:

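```javascript
function runNextTicks() {
  if (!hasTickScheduled() && !hasRejectionToWarn())
    runMicrotasks();
  if (!hasTickScheduled() && !hasRejectionToWarn())
    return;

  processTicksAndRejections();
}
```

During my study of the NodeJS source code, I had a hard time figuring out how the microtask queue is handled. runNextTicks gives the answer: when no nextTick callbacks or unhandled rejections are pending, it drains the microtask queue directly; otherwise it calls processTicksAndRejections, which runs the nextTick callbacks and then the microtasks. In other words, microtasks are treated with high priority, right after the nextTick callbacks.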
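The effect of runNextTicks can be observed from user code. On Node.js 11 and later, the nextTick and microtask queues are drained between two setImmediate callbacks, with the nextTick callbacks first:

```javascript
const order = [];

setImmediate(() => {
  order.push('immediate 1');
  Promise.resolve().then(() => order.push('promise (from immediate 1)'));
  process.nextTick(() => order.push('nextTick (from immediate 1)'));
});
setImmediate(() => order.push('immediate 2'));

process.on('exit', () => console.log(order));
// [ 'immediate 1', 'nextTick (from immediate 1)',
//   'promise (from immediate 1)', 'immediate 2' ]
```

Although the promise callback was queued before the nextTick callback, the nextTick callback still runs first, and both run before the second immediate.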

Close Callbacks

This phase processes callbacks that fire after a resource has been closed abruptly. For example, when a socket is destroyed with socket.destroy(), its 'close' event is emitted during this phase. Otherwise, such events are dispatched via process.nextTick.
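A sketch of an abrupt close: the server destroys each accepted socket immediately. The 'connection' callback runs in the Poll phase, and the socket's 'close' event is emitted from the handle's close callback, which libuv runs in the Close Callbacks phase. Since the Check phase comes earlier in the same tick, an immediate scheduled at the same moment is expected to be recorded first. The client simply ignores the resulting connection error.

```javascript
const net = require('net');

const events = [];

const server = net.createServer((socket) => {
  // This connection callback runs in the Poll phase.
  setImmediate(() => events.push('immediate')); // Check phase of this tick
  socket.on('close', () => {
    events.push('close');
    server.close(); // stop listening so the process can exit
  });
  socket.destroy(); // abrupt close of the underlying handle
});

server.listen(0, '127.0.0.1', () => {
  const client = net.connect(server.address().port, '127.0.0.1');
  client.on('error', () => {}); // the server hangs up at once; ignore e.g. ECONNRESET
});

process.on('exit', () => console.log(events));
```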

process.nextTick

Technically, process.nextTick is part of the asynchronous NodeJS API, but it is not part of the event loop itself. It has a dedicated queue for its callbacks, called nextTickQueue. This queue is drained as soon as the current operation completes, regardless of the current phase of the event loop.

This API is very powerful for executing logic at a very high priority, even higher than microtasks. At the same time, it should be used with caution because it can starve the event loop, as stated in the official docs:

> Looking back at our diagram, any time you call process.nextTick() in a given phase, all callbacks passed to process.nextTick() will be resolved before the event loop continues. This can create some bad situations because it allows you to "starve" your I/O by making recursive process.nextTick() calls, which prevents the event loop from reaching the poll phase.